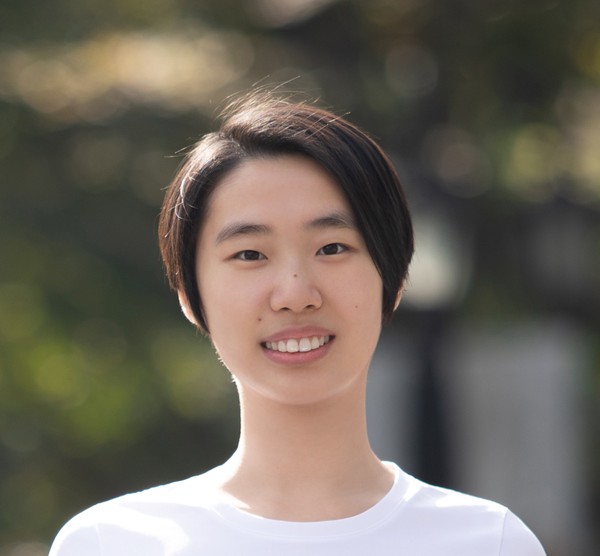

Zhijing Jin (Max Planck Institute for Intelligent Systems, University of Toronto)

16:00 - 17:00 UTC, 11 September 2025

Zhijing Jin (she/her) is an incoming Assistant Professor at the University of Toronto and currently a postdoc at the Max Planck Institute for Intelligent Systems in Germany. She is an ELLIS advisor and will also be a faculty member at the Vector Institute. Her research focuses on causal reasoning with LLMs and AI safety in multi-agent LLMs. She has received three Rising Star awards, two Best Paper awards at NeurIPS 2024 workshops, two PhD fellowships, and a postdoc fellowship. More information can be found on her personal website.